OperationQueue is built on top of Grand Central Dispatch (GCD) and provides a higher-level abstraction for managing concurrent operations.

Operation is an abstract class, so it is never used directly. Instead, we use the system-defined BlockOperation subclass or create our own subclass, and then start an operation either by adding it to an OperationQueue or by calling its start() method manually.
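A minimal sketch of both options, assuming a plain Swift script with Foundation available. `GreetingOperation` and the `Log` helper are hypothetical names used only for illustration; the example runs a BlockOperation through a queue and starts a custom subclass manually.

```swift
import Foundation

// Thread-safe log, because queued operations run on background threads.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []

    func append(_ entry: String) {
        lock.lock()
        entries.append(entry)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return entries
    }
}

let log = Log()

// Option 1: the system-defined BlockOperation wraps a closure.
let blockOperation = BlockOperation {
    log.append("block")
}

// Option 2: a custom subclass (hypothetical) overrides main() with its work.
final class GreetingOperation: Operation {
    let recipient: String
    let log: Log

    init(recipient: String, log: Log) {
        self.recipient = recipient
        self.log = log
        super.init()
    }

    override func main() {
        // Bail out early if the operation has been cancelled.
        guard !isCancelled else { return }
        log.append("hello, \(recipient)")
    }
}

// Start an operation by adding it to a queue...
let queue = OperationQueue()
queue.addOperation(blockOperation)
queue.waitUntilAllOperationsAreFinished()

// ...or by calling start() directly; a non-concurrent operation then runs
// synchronously on the calling thread.
let greeting = GreetingOperation(recipient: "world", log: log)
greeting.start()
```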

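The queue manages the execution of its operations automatically. Among operations that are ready to run and share the same priority, it generally starts them in FIFO (First-In, First-Out) order, but unfinished dependencies and each operation's queuePriority can change that order. We can also adjust priorities or cancel operations as needed.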
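A minimal sketch of adjusting priority and cancelling, assuming a serial queue (maxConcurrentOperationCount = 1) that is suspended while the operations are added so that none of them starts early. The operation names and the `Log` helper are hypothetical.

```swift
import Foundation

// Thread-safe log of which operation blocks actually ran.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []

    func append(_ entry: String) {
        lock.lock()
        entries.append(entry)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return entries
    }
}

let log = Log()
let queue = OperationQueue()
queue.maxConcurrentOperationCount = 1   // run one operation at a time
queue.isSuspended = true                // hold work until everything is queued

let low = BlockOperation { log.append("low") }
low.queuePriority = .low

let high = BlockOperation { log.append("high") }
high.queuePriority = .high

let discarded = BlockOperation { log.append("discarded") }

queue.addOperations([low, high, discarded], waitUntilFinished: false)

// Cancelled before it starts, so its block is never executed.
discarded.cancel()

queue.isSuspended = false
queue.waitUntilAllOperationsAreFinished()
// Among ready operations the queue favors a higher queuePriority,
// so "high" normally runs before "low" here.
```

The assertions below check only which blocks ran, not their order, because queuePriority is a hint the queue uses when choosing among ready operations rather than a strict ordering guarantee.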

Operation queues also provide built-in support for dependencies and cancellation, which makes them well suited to managing complex workflows.

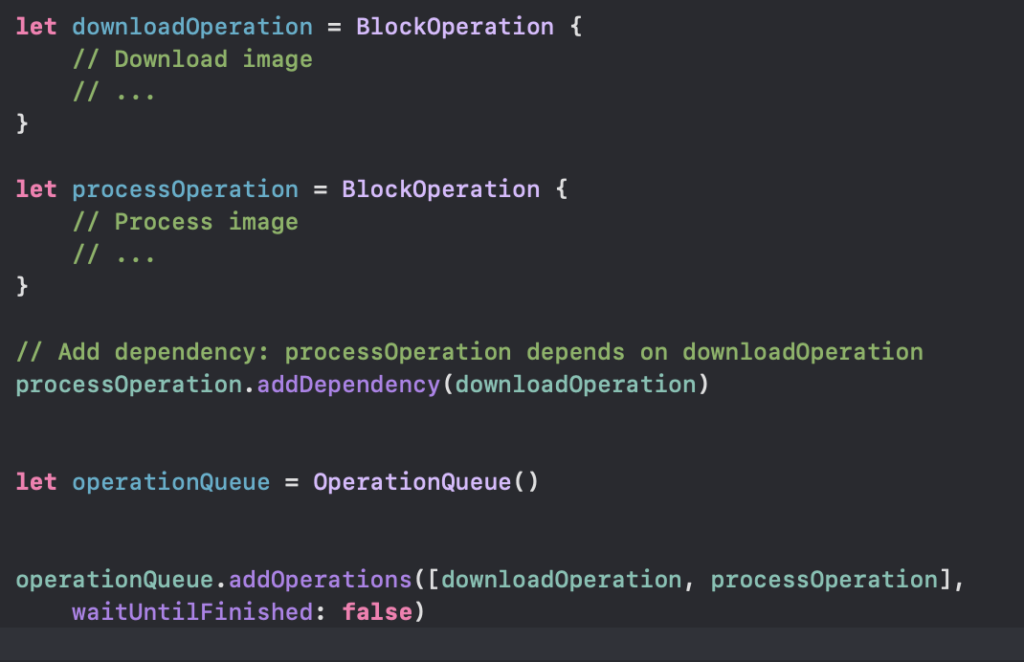

Scenario 1:

Imagine you have two tasks: Task A downloads an image from a URL, and Task B processes the downloaded image. Task B should only execute after Task A has completed successfully.

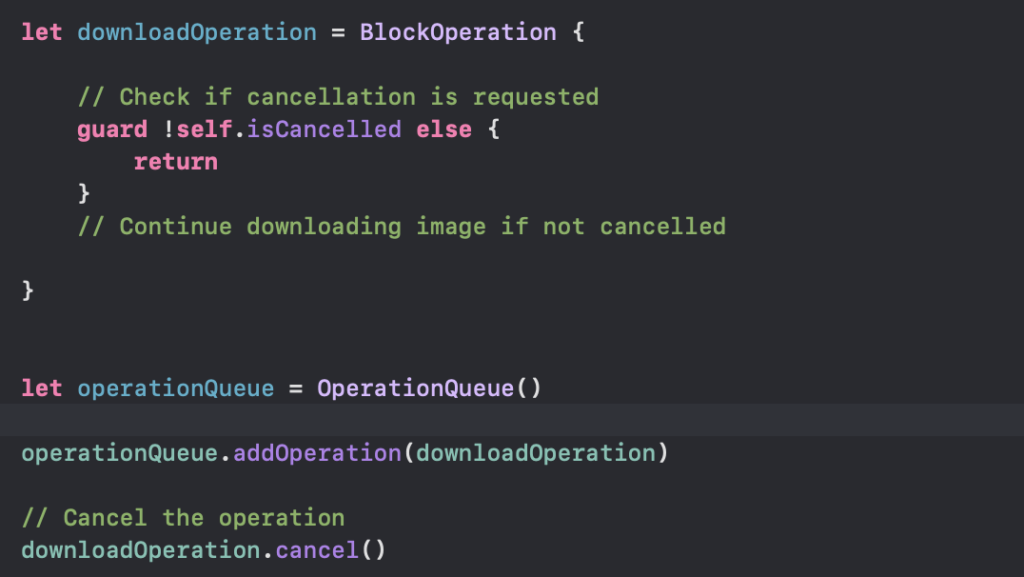
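A minimal sketch of Scenario 1, assuming the download is simulated (no real network request) and that Task A hands its result to Task B through a small hypothetical `ImagePipeline` object. `downloadOperation` and `processOperation` are BlockOperations linked with addDependency(_:).

```swift
import Foundation

// Hypothetical shared state passed from Task A to Task B, guarded by a lock.
final class ImagePipeline: @unchecked Sendable {
    private let lock = NSLock()
    private var storedData: Data?
    private var storedSteps: [String] = []

    var imageData: Data? {
        get { lock.lock(); defer { lock.unlock() }; return storedData }
        set { lock.lock(); storedData = newValue; lock.unlock() }
    }

    func record(_ step: String) {
        lock.lock()
        storedSteps.append(step)
        lock.unlock()
    }

    var steps: [String] {
        lock.lock()
        defer { lock.unlock() }
        return storedSteps
    }
}

let pipeline = ImagePipeline()

// Task A: stand-in for downloading an image from a URL.
let downloadOperation = BlockOperation {
    Thread.sleep(forTimeInterval: 0.1)                    // simulate network latency
    pipeline.imageData = Data([0x89, 0x50, 0x4E, 0x47])   // first bytes of a PNG signature
    pipeline.record("download")
}

// Task B: process the downloaded image.
let processOperation = BlockOperation {
    // A dependency guarantees ordering, not success, so check the input.
    guard let data = pipeline.imageData else { return }
    pipeline.record("process \(data.count) bytes")
}

// processOperation will not start until downloadOperation has finished.
processOperation.addDependency(downloadOperation)

let queue = OperationQueue()
// Added in "reverse" order: the dependency, not insertion order, decides.
queue.addOperations([processOperation, downloadOperation], waitUntilFinished: true)
```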

Scenario 2:

Suppose the user decides to cancel the image downloading process while it’s in progress.
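A minimal sketch of how Scenario 2 could be handled with cooperative cancellation. `ChunkedDownloadOperation` is a hypothetical subclass that simulates a download with Thread.sleep instead of a real network request; the semaphore exists only so the example can cancel at a predictable point, once the download is in progress.

```swift
import Foundation

// Hypothetical operation that "downloads" an image in chunks and checks
// isCancelled between chunks so it can stop early.
final class ChunkedDownloadOperation: Operation {
    let totalChunks: Int
    private let firstChunkArrived: DispatchSemaphore
    private(set) var chunksReceived = 0

    init(totalChunks: Int, firstChunkArrived: DispatchSemaphore) {
        self.totalChunks = totalChunks
        self.firstChunkArrived = firstChunkArrived
        super.init()
    }

    override func main() {
        for chunk in 0..<totalChunks {
            // Cooperative cancellation: stop as soon as cancel() has been called.
            if isCancelled { return }
            Thread.sleep(forTimeInterval: 0.01)   // simulate receiving one chunk
            chunksReceived += 1
            if chunk == 0 { firstChunkArrived.signal() }
        }
    }
}

let firstChunk = DispatchSemaphore(value: 0)
let download = ChunkedDownloadOperation(totalChunks: 100, firstChunkArrived: firstChunk)
let queue = OperationQueue()
queue.addOperation(download)

firstChunk.wait()    // the download is now in progress
download.cancel()    // the user taps "Cancel"; this only sets isCancelled
queue.waitUntilAllOperationsAreFinished()
```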

Explanation:

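- Dependency Creation: By using the addDependency(_:) method, we establish a dependency between processOperation and downloadOperation. This ensures that processOperation will not start until downloadOperation finishes. Note that an operation also counts as finished when it is cancelled, so the dependency guarantees ordering, not success; processOperation should still confirm that the image was actually downloaded.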

- Cancellation: By calling the cancel() method on an operation, we request that it stop executing. However, this only sets the operation's isCancelled property to true. It is up to the operation itself to check this property periodically during its execution and stop if cancellation has been requested.
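A minimal sketch of two consequences of this design, using simple BlockOperations with placeholder work under the same names as the explanation. With the default start() implementation, an operation cancelled before it starts never runs its block; and because a cancelled operation still counts as finished, its dependents run anyway. The `Log` helper is hypothetical.

```swift
import Foundation

// Thread-safe record of which blocks actually executed.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []

    func append(_ entry: String) {
        lock.lock()
        entries.append(entry)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return entries
    }
}

let log = Log()

let downloadOperation = BlockOperation { log.append("download") }
let processOperation = BlockOperation { log.append("process") }
processOperation.addDependency(downloadOperation)

// Cancel before the queue starts it: the download block is never executed.
downloadOperation.cancel()

let queue = OperationQueue()
queue.addOperations([downloadOperation, processOperation], waitUntilFinished: true)

// The cancelled dependency still counts as finished, so processOperation ran.
// Real code should check for missing input (or downloadOperation.isCancelled).
```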

Operation Queue offers powerful features for managing dependencies between operations and handling cancellation requests gracefully. These features are particularly useful in scenarios where tasks have complex interdependencies or when the user needs to interact with long-running operations.

GCD vs OperationQueue

GCD:

- Doesn’t have built-in support for managing dependencies between tasks. We need to manually handle dependencies by using dispatch groups or nesting blocks.

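- Has only limited built-in support for cancellation. A DispatchWorkItem can be cancelled with cancel(), which prevents it from running if it has not started yet, but a work item that is already running must check its isCancelled property and return early. A plain closure submitted with async cannot be cancelled at all.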
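For comparison, a minimal GCD sketch of the same two concerns, assuming a plain Swift script: a DispatchGroup with notify(queue:) stands in for a dependency, and DispatchWorkItem.cancel() provides the limited cancellation GCD does offer. The queue labels and the `Log` helper are hypothetical.

```swift
import Foundation
import Dispatch

// Thread-safe log of which blocks actually ran.
final class Log: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []

    func append(_ entry: String) {
        lock.lock()
        entries.append(entry)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return entries
    }
}

let log = Log()
let worker = DispatchQueue(label: "example.worker", attributes: .concurrent)

// Manual dependency: the group reports when "download" is done,
// and notify(queue:) schedules "process" afterwards.
let group = DispatchGroup()
worker.async(group: group) {
    log.append("download")
}
let processed = DispatchSemaphore(value: 0)
group.notify(queue: worker) {
    log.append("process")
    processed.signal()
}
processed.wait()

// Manual cancellation: a cancelled DispatchWorkItem that has not started is skipped.
let gate = DispatchSemaphore(value: 0)
let serial = DispatchQueue(label: "example.serial")
serial.async { gate.wait() }          // keep the serial queue busy for now
let item = DispatchWorkItem { log.append("cancelled item ran") }
serial.async(execute: item)
item.cancel()                         // cancelled before it had a chance to start
gate.signal()
serial.sync {}                        // wait for everything queued so far
```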

Operation Queue:

- Offers built-in support for managing dependencies between operations using addDependency(_:) method. This makes it easier to define and manage complex task dependencies.

- Provides built-in support for cancellation through the cancel() method, which sets an operation's isCancelled property. Operations can check this property periodically and abort their execution if cancellation is requested.